Whether you’re a newcomer aiming to leverage Google Cloud’s free credits or a seasoned developer looking to optimize your containerized applications, this tutorial provides all the essential knowledge to get your Google Kubernetes Engine cluster up and running in just 10 minutes. Explore various GKE modes, node pool configurations, auto-scaling features, and more to ensure your applications are scalable, resilient, and efficiently managed.

What is Google Kubernetes Engine?

Google Kubernetes Engine (GKE) is a Google-managed implementation of Kubernetes, the open-source container orchestration platform. If you want to run, monitor, and scale containerized microservice applications in the cloud, GKE is a strong choice.

GKE Benefits

- Automatic scaling of nodes based on the number of Pods in the cluster with Autopilot mode or with node auto-provisioning in Standard mode.

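- Built-in logging and monitoring.

- Automatic upgrades to new Kubernetes versions.

- Multi-cluster Services capabilities.

- A monthly uptime SLA above 99% (the exact commitment depends on the cluster type).

- Pricing that matches the mode: in Autopilot mode you pay for the resources your Pods request, while in GKE Standard mode you pay for all resources on nodes, regardless of Pod requests.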

If you are new to Google Cloud Platform, refer to Google Cloud Free Credits – Google Cloud Tutorials to create a Google Cloud free tier account.

Google Cloud Kubernetes Cluster Set Up

Visit https://console.cloud.google.com/ and search for Kubernetes Engine.

Enable the Kubernetes Engine API.

GKE offers the following modes of operation for clusters:

- Autopilot mode (recommended): GKE manages the underlying infrastructure such as node configuration, autoscaling, auto-upgrades, baseline security configurations, and baseline networking configuration.

- Standard mode: You manage the underlying infrastructure, including configuring the individual nodes.

You can’t convert a cluster from Standard to Autopilot after cluster creation.
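The two modes also correspond to two different gcloud subcommands: `gcloud container clusters create-auto` for Autopilot and `gcloud container clusters create` for Standard. The sketch below only assembles the command as an argument list so you can inspect it or pass it to `subprocess.run`; the cluster name `demo-cluster` and the region `us-central1` are placeholder assumptions, and a real invocation usually needs more flags (project, networking, and so on).

```python
import shlex


def create_cluster_argv(name: str, region: str, autopilot: bool) -> list[str]:
    """Build (but do not run) a gcloud command that creates a GKE cluster."""
    # Autopilot clusters have their own subcommand; Standard clusters use "create".
    subcommand = "create-auto" if autopilot else "create"
    return ["gcloud", "container", "clusters", subcommand, name, f"--region={region}"]


if __name__ == "__main__":
    # Placeholder name and region; replace them with your own values.
    print(shlex.join(create_cluster_argv("demo-cluster", "us-central1", autopilot=True)))
    print(shlex.join(create_cluster_argv("demo-cluster", "us-central1", autopilot=False)))
```

With `--region`, the Standard command creates a regional cluster; pass `--zone` instead if you want a zonal one.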

In this tutorial, you learn to set up a GKE cluster using Standard mode.

In Standard mode, you are responsible for managing the GKE configuration, including the cluster, its node pools, and individual nodes. Plan your compute requirements carefully, because each node is charged for its compute resources whether or not Pods run on it.

Continuous monitoring of the GKE cluster is required to keep resource usage at an optimal level and to maintain application uptime.

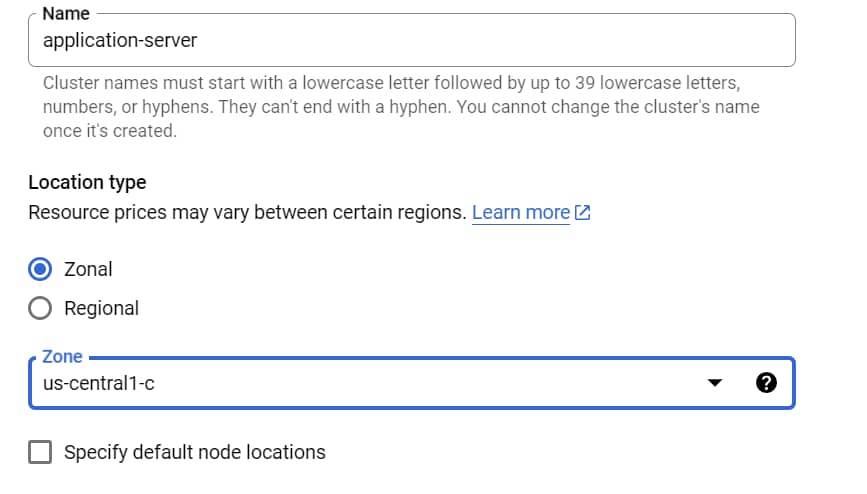

Enter a cluster name and select a location for your GKE cluster.

The control plane manages the workloads running on the cluster’s nodes. A single-zone cluster has a single control plane running in one zone, and its nodes run in that same zone.

In a multi-zonal cluster, nodes run in multiple zones, but there is still only a single replica of the control plane.

If you need higher availability for the control plane, consider creating a regional cluster. In a regional cluster, the control plane is replicated across multiple zones in the same region, so it stays available during a zonal outage. This increases the cost of the GKE cluster, largely because the nodes are also spread across several zones (three by default).
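Most of the cost difference shows up in node counts. In gcloud, the node count passed with `--num-nodes` applies to each zone, so spreading a node pool over three zones triples the number of nodes. The sketch below is a simplified model built on that assumption; the zone names are placeholders, and it ignores everything except control plane replication and node count.

```python
from dataclasses import dataclass

LOCATION_TYPES = {"single-zone", "multi-zonal", "regional"}


@dataclass
class Footprint:
    control_plane_replicated: bool  # True only for regional clusters
    total_nodes: int                # per-zone node count x number of node zones


def cluster_footprint(location_type: str, node_zones: list[str], nodes_per_zone: int) -> Footprint:
    """Simplified model of how the location type affects availability and node count."""
    if location_type not in LOCATION_TYPES:
        raise ValueError(f"unknown location type: {location_type}")
    if location_type == "single-zone" and len(node_zones) != 1:
        raise ValueError("a single-zone cluster runs its nodes in exactly one zone")
    return Footprint(
        control_plane_replicated=(location_type == "regional"),
        # The node count applies per zone, so every extra zone adds a full set of nodes.
        total_nodes=nodes_per_zone * len(node_zones),
    )


if __name__ == "__main__":
    zones = ["us-central1-a", "us-central1-b", "us-central1-c"]
    print(cluster_footprint("single-zone", zones[:1], 2))
    print(cluster_footprint("regional", zones, 2))
```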

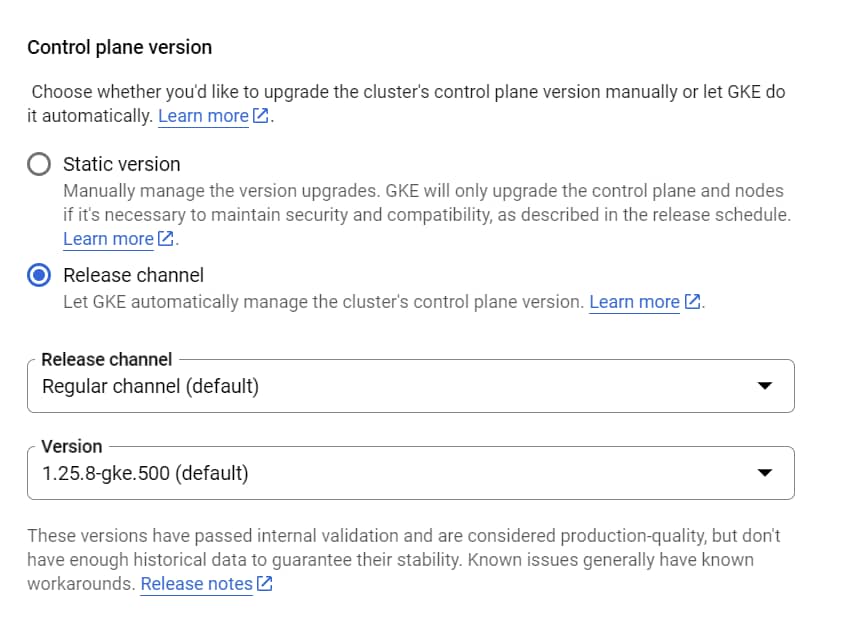

Release channels implement Google Kubernetes Engine (GKE) best practices for versioning and upgrading your GKE clusters.

When you enroll a new cluster in a release channel, Google Cloud automatically manages the version and upgrade cadence for the cluster’s control plane and its nodes.

Google Cloud Kubernetes Cluster Node Pool configuration

A node pool is a group of nodes within a cluster that all have the same configuration.

When you create node pools and workloads in a GKE cluster, you can define a compact placement policy, which specifies that these nodes or workloads should be placed in closer physical proximity to each other within a zone. Having nodes closer to each other can reduce network latency between nodes, which can be especially useful for tightly-coupled batch workloads.
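From the command line, compact placement is requested per node pool with the `--placement-type=COMPACT` flag of `gcloud container node-pools create`. The sketch below only builds that command; it assumes a zonal cluster, and the cluster, pool, zone, and machine type values are placeholders. Only some machine families support compact placement, so check the GKE documentation before picking one.

```python
import shlex


def compact_node_pool_argv(cluster: str, pool: str, zone: str, machine_type: str) -> list[str]:
    """Build (but do not run) a gcloud command for a node pool with compact placement."""
    return [
        "gcloud", "container", "node-pools", "create", pool,
        f"--cluster={cluster}",
        # Assumes a zonal cluster; compact placement keeps the pool's nodes in one zone.
        f"--zone={zone}",
        # Placeholder machine type; not every machine family supports compact placement.
        f"--machine-type={machine_type}",
        "--placement-type=COMPACT",
    ]


if __name__ == "__main__":
    print(shlex.join(compact_node_pool_argv("demo-cluster", "batch-pool",
                                            "us-central1-a", "c2-standard-8")))
```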

The GKE cluster autoscaler automatically resizes the number of nodes in a node pool as workload demand increases or decreases. When demand is low, the cluster autoscaler scales back down to the minimum size that you specified. This keeps your workloads available during demand peaks while keeping costs under control. You don’t need to manually add or remove nodes or overprovision node pools up front. Instead, you specify a minimum and maximum size for the node pool, and the rest is automatic.

Enable cluster autoscaling and specify the minimum and maximum number of nodes.
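To make the role of the minimum and maximum concrete, here is a deliberately simplified model (not the real algorithm): it sizes the pool to fit the total CPU requests and then clamps the result to the bounds. The actual cluster autoscaler instead reacts to Pods that cannot be scheduled and removes underused nodes. The 1900 millicores of allocatable CPU per node is a hypothetical figure. In the gcloud CLI, the bounds correspond to `--enable-autoscaling`, `--min-nodes`, and `--max-nodes`, which are per-zone values.

```python
import math


def desired_node_count(pod_cpu_requests_m: list[int], allocatable_cpu_m: int,
                       min_nodes: int, max_nodes: int) -> int:
    """Toy model: nodes needed to fit all CPU requests, clamped to [min_nodes, max_nodes].

    The real cluster autoscaler simulates scheduling of pending Pods and removes
    underused nodes; this only illustrates how the min/max bounds constrain it.
    """
    if not 0 <= min_nodes <= max_nodes:
        raise ValueError("require 0 <= min_nodes <= max_nodes")
    needed = math.ceil(sum(pod_cpu_requests_m) / allocatable_cpu_m)
    return max(min_nodes, min(max_nodes, needed))


if __name__ == "__main__":
    # Hypothetical node with 1900 millicores allocatable to Pods.
    print(desired_node_count([500] * 10, 1900, min_nodes=1, max_nodes=5))  # busy: 3
    print(desired_node_count([500] * 40, 1900, min_nodes=1, max_nodes=5))  # capped at 5
    print(desired_node_count([200], 1900, min_nodes=1, max_nodes=5))       # quiet: 1
```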

GKE offers built-in, configurable strategies that determine how a node pool is upgraded.

By default, GKE uses the surge upgrade strategy for node pool upgrades. Surge upgrades replace nodes in a rolling fashion, a few at a time, which suits applications that can handle incremental, non-disruptive changes.

The alternative is a blue-green upgrade, in which GKE maintains two sets of nodes (the original and the new environment) at the same time, making rollback as easy as possible.
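The trade-off between the two strategies is mostly speed versus extra capacity. The sketch below models it under stated assumptions: surge upgrades process `max_surge + max_unavailable` nodes per batch (with `max_surge=1`, `max_unavailable=0` as the assumed defaults), and a blue-green upgrade briefly runs a full second set of nodes. In gcloud these settings correspond to `--max-surge-upgrade`, `--max-unavailable-upgrade`, and `--enable-blue-green-upgrade`; the peak numbers are rough estimates, not billing figures.

```python
import math


def surge_batches(node_count: int, max_surge: int = 1, max_unavailable: int = 0) -> int:
    """Rolling batches needed if max_surge + max_unavailable nodes are upgraded at a time."""
    parallel = max_surge + max_unavailable
    if parallel < 1:
        raise ValueError("max_surge + max_unavailable must be at least 1")
    return math.ceil(node_count / parallel)


def peak_node_count(strategy: str, node_count: int, max_surge: int = 1) -> int:
    """Rough peak number of nodes during an upgrade, which drives quota and cost."""
    if strategy == "surge":
        # Up to max_surge extra nodes exist alongside the current ones.
        return node_count + max_surge
    if strategy == "blue-green":
        # A full "green" set of nodes is created next to the "blue" set.
        return 2 * node_count
    raise ValueError(f"unknown strategy: {strategy}")


if __name__ == "__main__":
    print(surge_batches(6))                                  # 6 batches, one node at a time
    print(surge_batches(6, max_surge=2, max_unavailable=1))  # 2 batches
    print(peak_node_count("surge", 6), peak_node_count("blue-green", 6))  # 7 12
```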

Configure node settings

If you use GKE Standard, you can choose the operating system image that runs on each node during cluster or node pool creation.

We will use Container-Optimized OS with containerd (cos_containerd), which is the recommended node operating system.

Specify the machine family, machine type, and boot disk size according to your requirements.

For Standard clusters, you can configure the maximum number of Pods that can run on a node in the node networking section.
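The same node settings can be expressed as gcloud flags: `--image-type=COS_CONTAINERD` selects Container-Optimized OS with containerd, `--machine-type` picks the machine type, and `--disk-size` sets each node's boot disk size in GB. The sketch below only assembles the command; the cluster name, zone, `e2-standard-4`, and 100 GB are placeholder assumptions, not recommendations.

```python
import shlex


def standard_cluster_argv(name: str, zone: str, machine_type: str = "e2-standard-4",
                          disk_size_gb: int = 100) -> list[str]:
    """Build (but do not run) a Standard-mode cluster command with explicit node settings."""
    return [
        "gcloud", "container", "clusters", "create", name,
        f"--zone={zone}",
        # Container-Optimized OS with containerd, the recommended node image.
        "--image-type=COS_CONTAINERD",
        f"--machine-type={machine_type}",
        # Boot disk size of each node, in GB.
        f"--disk-size={disk_size_gb}",
    ]


if __name__ == "__main__":
    print(shlex.join(standard_cluster_argv("demo-cluster", "us-central1-a")))
```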

This value determines the size of the IP address ranges that are assigned to nodes on Google Kubernetes Engine (GKE).
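The relationship between the maximum Pods per node and the node’s IP range can be computed directly. This sketch assumes GKE’s documented rule that each node receives the smallest CIDR block holding at least twice its maximum number of Pods, and it assumes 8 to 256 as the valid input range. The second function shows the practical consequence: a smaller per-node range lets more nodes fit into the cluster’s Pod range (a `/14` is used as an example).

```python
def node_pod_range_prefix(max_pods_per_node: int) -> int:
    """Prefix length of the Pod range given to each node.

    Assumes each node gets the smallest CIDR block with at least
    2 x max_pods_per_node addresses.
    """
    if not 8 <= max_pods_per_node <= 256:
        raise ValueError("assumed valid range is 8-256 Pods per node")
    needed = 2 * max_pods_per_node
    size = 1
    while size < needed:
        size *= 2
    return 32 - (size.bit_length() - 1)


def max_nodes_for_pod_range(cluster_pod_prefix: int, max_pods_per_node: int) -> int:
    """How many per-node ranges fit in the cluster's Pod range (e.g. a /14)."""
    node_prefix = node_pod_range_prefix(max_pods_per_node)
    return 2 ** (node_prefix - cluster_pod_prefix)


if __name__ == "__main__":
    print(node_pod_range_prefix(110))        # 24 (256 addresses per node)
    print(node_pod_range_prefix(32))         # 26
    print(max_nodes_for_pod_range(14, 110))  # 1024
    print(max_nodes_for_pod_range(14, 32))   # 4096
```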

Configure cluster settings

Set cluster-level criteria for automatic maintenance, autoscaling, and auto-provisioning.

To configure a maintenance window, you configure when it starts, how long it lasts, and how often it repeats. For example, you can configure a maintenance window that recurs weekly on Monday through Friday for four hours each day.
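In gcloud, a recurring window is set with `--maintenance-window-start`, `--maintenance-window-end`, and `--maintenance-window-recurrence`, where the recurrence is an RFC 5545 rule such as `FREQ=WEEKLY;BYDAY=MO,TU,WE,TH,FR`. The sketch below expands such a weekly rule into concrete windows for one week, to show what "Monday through Friday for four hours each day" means in practice. It handles only weekly rules with `BYDAY`, and the 02:00 UTC start time is an assumption.

```python
from datetime import datetime, timedelta, timezone

WEEKDAY_INDEX = {"MO": 0, "TU": 1, "WE": 2, "TH": 3, "FR": 4, "SA": 5, "SU": 6}


def parse_byday(rrule: str) -> list[str]:
    """Extract the BYDAY list from a weekly rule such as 'FREQ=WEEKLY;BYDAY=MO,FR'."""
    parts = dict(item.split("=", 1) for item in rrule.split(";"))
    if parts.get("FREQ") != "WEEKLY":
        raise ValueError("this sketch only handles weekly rules")
    return parts["BYDAY"].split(",")


def windows_for_week(monday: datetime, rrule: str, start_hour: int,
                     duration: timedelta) -> list[tuple[datetime, datetime]]:
    """Concrete (start, end) maintenance windows for the week beginning on `monday`."""
    if monday.weekday() != 0:
        raise ValueError("monday must fall on a Monday")
    windows = []
    for code in parse_byday(rrule):
        day = monday + timedelta(days=WEEKDAY_INDEX[code])
        start = day.replace(hour=start_hour, minute=0, second=0, microsecond=0)
        windows.append((start, start + duration))
    return windows


if __name__ == "__main__":
    week = windows_for_week(datetime(2024, 1, 1, tzinfo=timezone.utc),
                            "FREQ=WEEKLY;BYDAY=MO,TU,WE,TH,FR", start_hour=2,
                            duration=timedelta(hours=4))
    for start, end in week:
        print(start.isoformat(), "->", end.isoformat())
    print(sum((end - start for start, end in week), timedelta()))  # 20:00:00
```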

Configure maintenance exclusions to specify when you don’t want automated version upgrades of the control plane and nodes to occur.
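Exclusions can be added from the CLI with `gcloud container clusters update` and the `--add-maintenance-exclusion-name`, `--add-maintenance-exclusion-start`, and `--add-maintenance-exclusion-end` flags. The sketch below shows the effect on scheduling: any maintenance window that overlaps an exclusion is unusable. It assumes the exclusion blocks all upgrades (GKE also offers narrower exclusion scopes), and the dates are a hypothetical release freeze.

```python
from datetime import datetime, timezone

Interval = tuple[datetime, datetime]


def overlaps(a: Interval, b: Interval) -> bool:
    """True if two half-open intervals [start, end) overlap."""
    return a[0] < b[1] and b[0] < a[1]


def usable_windows(windows: list[Interval], exclusions: list[Interval]) -> list[Interval]:
    """Drop every maintenance window that overlaps any maintenance exclusion."""
    return [w for w in windows if not any(overlaps(w, ex) for ex in exclusions)]


def utc(day: int, hour: int) -> datetime:
    return datetime(2024, 1, day, hour, tzinfo=timezone.utc)


if __name__ == "__main__":
    # Weekday windows from 02:00 to 06:00 UTC, Monday 1 January to Friday 5 January 2024.
    windows = [(utc(d, 2), utc(d, 6)) for d in range(1, 6)]
    # Hypothetical freeze from Wednesday 00:00 until Friday 00:00.
    freeze = [(utc(3, 0), utc(5, 0))]
    print([start.day for start, _ in usable_windows(windows, freeze)])  # [1, 2, 5]
```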

When certain events occur that are relevant to your GKE clusters, such as important scheduled upgrades or available security bulletins, GKE publishes notifications about those events as messages to Pub/Sub topics that you configure.
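Notifications are enabled per cluster with the `--notification-config` flag, which points at an existing Pub/Sub topic in the form `projects/PROJECT_ID/topics/TOPIC`. The sketch below only builds the `gcloud container clusters update` command; the cluster, zone, project, and topic names are placeholders, and the topic is assumed to have been created beforehand (for example with `gcloud pubsub topics create`).

```python
import shlex


def enable_notifications_argv(cluster: str, zone: str, project_id: str, topic: str) -> list[str]:
    """Build (but do not run) a command that sends cluster notifications to Pub/Sub."""
    # Full resource name of the (already existing) Pub/Sub topic.
    topic_path = f"projects/{project_id}/topics/{topic}"
    return [
        "gcloud", "container", "clusters", "update", cluster,
        f"--zone={zone}",
        f"--notification-config=pubsub=ENABLED,pubsub-topic={topic_path}",
    ]


if __name__ == "__main__":
    print(shlex.join(enable_notifications_argv("demo-cluster", "us-central1-a",
                                               "my-project", "gke-events")))
```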

Vertical Pod autoscaling automatically analyzes and adjusts your containers’ CPU requests and memory requests based on the actual resource use of your workloads.
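Vertical Pod autoscaling is turned on for a Standard cluster with `--enable-vertical-pod-autoscaling`, and recommendations are then produced per workload. As a rough intuition for how usage turns into a request, the toy function below takes a high percentile of observed CPU usage and adds a safety margin. This is only loosely modeled on the open-source VPA recommender; the 90th percentile, the 15% margin, and the sample values are assumptions, and the real recommender works with decaying histograms over time.

```python
def recommend_cpu_request_m(usage_samples_m: list[int], percentile: int = 90,
                            margin_pct: int = 15) -> int:
    """Toy VPA-style recommendation: a high percentile of observed usage plus a margin."""
    if not usage_samples_m:
        raise ValueError("need at least one usage sample")
    ordered = sorted(usage_samples_m)
    # Nearest-rank percentile, using integer ceiling division to avoid float rounding.
    rank = max(1, -(-percentile * len(ordered) // 100))
    target = ordered[rank - 1]
    # Round up so the recommendation is never below target * (1 + margin).
    return -(-target * (100 + margin_pct) // 100)


if __name__ == "__main__":
    # Hypothetical CPU usage samples for one container, in millicores.
    samples = [100, 120, 150, 200, 180, 170, 160, 140, 130, 110]
    print(recommend_cpu_request_m(samples))  # 207 (90th percentile 180, plus 15%)
```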

Node auto-provisioning manages the cluster’s node pools by creating and deleting node pools as needed based on workload needs.
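Node auto-provisioning is bounded by cluster-wide resource limits rather than per-pool node counts. In gcloud, it is enabled with `--enable-autoprovisioning` together with `--min-cpu`, `--max-cpu`, `--min-memory`, and `--max-memory` (memory in GB). The sketch below only builds the command and validates the limits; the cluster name, zone, and limit values are placeholder assumptions.

```python
import shlex


def autoprovisioning_argv(cluster: str, zone: str, max_cpu: int, max_memory_gb: int,
                          min_cpu: int = 0, min_memory_gb: int = 0) -> list[str]:
    """Build (but do not run) a command enabling node auto-provisioning with limits."""
    if not (0 <= min_cpu <= max_cpu and 0 <= min_memory_gb <= max_memory_gb):
        raise ValueError("minimums must be non-negative and not exceed maximums")
    return [
        "gcloud", "container", "clusters", "update", cluster,
        f"--zone={zone}",
        "--enable-autoprovisioning",
        # Cluster-wide limits: total vCPUs and GB of memory across all nodes.
        f"--min-cpu={min_cpu}", f"--max-cpu={max_cpu}",
        f"--min-memory={min_memory_gb}", f"--max-memory={max_memory_gb}",
    ]


if __name__ == "__main__":
    print(shlex.join(autoprovisioning_argv("demo-cluster", "us-central1-a",
                                           max_cpu=64, max_memory_gb=256)))
```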

Cluster networking is used to define how applications in this cluster communicate with each other and with the Kubernetes control plane, and how clients can reach them.

Review all settings and click Create to create the cluster.

The next step is to run microservices on GKE.

To learn more about running containers on GKE, see Deploy Microservices On Google Kubernetes Engine (GKE) In 8 Easy Steps – Google Cloud Tutorials.

FAQ – Google Cloud Kubernetes Cluster

How do I create a Kubernetes cluster in Google Cloud?

A Kubernetes cluster in Google Cloud can be set up in one of two modes: Autopilot or Standard. In Autopilot mode, Google Cloud manages the cluster configuration, and it is the recommended mode if you are a beginner.

How do I set up a Kubernetes cluster on Google Cloud in Standard mode?

Refer to the tutorial Easily Set Up Google Cloud Kubernetes Cluster Standard Mode In 10 Minutes – Google Cloud Tutorials to set up a Kubernetes cluster on Google Cloud in Standard mode.

How much does a Google Cloud Kubernetes cluster cost?

For detailed GKE pricing, refer to the Google Cloud Pricing Calculator.